The biggest recent event in the Kubernetes ecosystem was KubeCon NA 2023. The conference had a lot of good content and plenty of breaking news.

All the session videos from the conference have since been uploaded for anyone who wants to watch them.

Among the announcements was the subject of this article: Gateway API has released v1.0 and is now officially GA!

Before talking about whether Gateway API can become the future of traffic management in Kubernetes, let me first introduce some background.

Traffic Management in Kubernetes is actually divided into two main parts:

- North-south traffic

- East-west traffic

North-South traffic management

In the Kubernetes scenario, north-south traffic mainly refers to the traffic from outside the cluster to the cluster.

When a client wants to access services deployed in a Kubernetes cluster, those services need to be exposed outside the cluster. The most common way is through a Service of type NodePort or LoadBalancer.

Both of these methods are relatively simple, but if the user has many services to expose outside the cluster, they waste a lot of ports (NodePort) or a lot of IPs (LoadBalancer). Moreover, the Service API has a clearly defined scope that does not include load-balancer or gateway capabilities such as routing based on domain names, authentication, or authorization.
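As a rough illustration of these two approaches, the manifests below expose the same application once through a NodePort and once through a LoadBalancer. The Service names, the `app: echo` selector, and the port numbers are hypothetical values chosen for this sketch.

```yaml
# Exposes the app on a fixed port of every node (one port consumed per Service).
apiVersion: v1
kind: Service
metadata:
  name: echo-nodeport        # hypothetical name
spec:
  type: NodePort
  selector:
    app: echo                # hypothetical Pod label
  ports:
    - port: 80
      targetPort: 1027
      nodePort: 30080        # must fall inside the cluster's NodePort range
---
# Asks the infrastructure for an external load balancer (one IP consumed per Service).
apiVersion: v1
kind: Service
metadata:
  name: echo-lb              # hypothetical name
spec:
  type: LoadBalancer
  selector:
    app: echo
  ports:
    - port: 80
      targetPort: 1027
```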

Therefore, it would be unreasonable to expose cluster services only through Service.

Based on the above considerations, the community added Ingress as a built-in resource/API in Kubernetes v1.1 in 2015. As you can see below, its definition was very simple: for each Ingress resource, only the Host and Path could be specified, along with the application's Service, port and protocol.

    // An Ingress is a way to give services externally-reachable urls. Each Ingress is a
    // collection of rules that allow inbound connections to reach the endpoints defined by
    // a backend.
    type Ingress struct {
        unversioned.TypeMeta `json:",inline"`
        // Standard object's metadata.
        // More info: http://releases.k8s.io/HEAD/docs/devel/api-conventions.md#metadata
        v1.ObjectMeta `json:"metadata,omitempty"`

        // Spec is the desired state of the Ingress.
        // More info: http://releases.k8s.io/HEAD/docs/devel/api-conventions.md#spec-and-status
        Spec IngressSpec `json:"spec,omitempty"`

        // Status is the current state of the Ingress.
        // More info: http://releases.k8s.io/HEAD/docs/devel/api-conventions.md#spec-and-status
        Status IngressStatus `json:"status,omitempty"`
    }

    // IngressSpec describes the Ingress the user wishes to exist.
    type IngressSpec struct {
        // TODO: Add the ability to specify load-balancer IP just like what Service has already done?
        // A list of rules used to configure the Ingress.
        // http://<host>:<port>/<path>?<searchpart> -> IngressBackend
        // Where parts of the url conform to RFC 1738.
        Rules []IngressRule `json:"rules"`
    }

    // IngressRule represents the rules mapping the paths under a specified host to the related backend services.
    type IngressRule struct {
        // Host is the fully qualified domain name of a network host, or its IP
        // address as a set of four decimal digit groups separated by ".".
        // Conforms to RFC 1738.
        Host string `json:"host,omitempty"`

        // Paths describe a list of load-balancer rules under the specified host.
        Paths []IngressPath `json:"paths"`
    }

    // IngressPath associates a path regex with an IngressBackend.
    // Incoming urls matching the Path are forwarded to the Backend.
    type IngressPath struct {
        // Path is a regex matched against the url of an incoming request.
        Path string `json:"path,omitempty"`

        // Define the referenced service endpoint which the traffic will be forwarded to.
        Backend IngressBackend `json:"backend"`
    }

    // IngressBackend describes all endpoints for a given Service, port and protocol.
    type IngressBackend struct {
        // Specifies the referenced service.
        ServiceRef v1.LocalObjectReference `json:"serviceRef"`

        // Specifies the port of the referenced service.
        ServicePort util.IntOrString `json:"servicePort,omitempty"`

        // Specifies the protocol of the referenced service.
        Protocol v1.Protocol `json:"protocol,omitempty"`
    }

For most scenarios, this is only barely usable: it does not include other common features such as matching on request headers or methods, or path rewriting.

In addition, Ingress is just an API specification and a resource. For it to take effect, a controller is required to implement it.

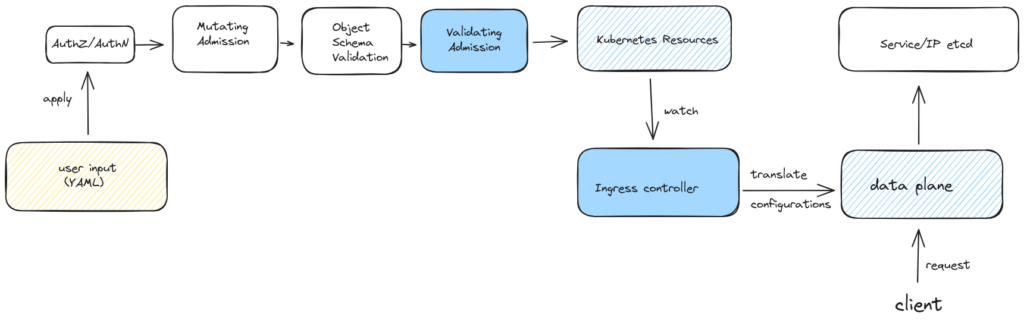

How Ingress works

As mentioned above, Ingress is a resource. When a user creates an Ingress resource in a Kubernetes cluster, the request goes through a series of steps:

- AuthN/AuthZ: authentication and authorization;

- Admission controllers: the most typical ones are Mutating Admission and Validating Admission;

- After passing all the above steps, the Ingress resource is actually written to etcd for storage.

However, when Kubernetes introduced the Ingress resource, it did not ship a corresponding controller for it, unlike Deployment, Pod and other built-in resources.

Therefore, an Ingress controller is needed to translate the rules defined in the Ingress resource into configuration that the data plane understands, and to apply that configuration to the data plane.

Only then can client requests be served. Currently, there are 30 Ingress controller implementations listed in the official Kubernetes documentation: https://kubernetes.io/docs/concepts/services-networking/ingress-controllers/
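The link between an Ingress resource and the controller that handles it is usually expressed through an IngressClass. The sketch below shows the kind of IngressClass that the Ingress-NGINX project uses; the class name `nginx` is an assumption that depends on how the controller was installed.

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx                       # referenced by spec.ingressClassName in an Ingress
spec:
  controller: k8s.io/ingress-nginx  # controller identifier used by Ingress-NGINX
```

An Ingress that sets `ingressClassName: nginx` is then picked up by that controller, while controllers with a different identifier ignore it.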

At the same time, because the Ingress API defines only limited content, it is not expressive enough. To meet the needs of different scenarios, each Ingress controller has to create its own CRDs (Custom Resource Definitions) or add annotations to Ingress resources. Take the Kubernetes Ingress-NGINX project as an example:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      annotations:
        nginx.ingress.kubernetes.io/use-regex: "true"
        nginx.ingress.kubernetes.io/rewrite-target: /$2
      name: rewrite
      namespace: default
    spec:
      ingressClassName: nginx
      rules:
        - host: rewrite.bar.com
          http:
            paths:
              - path: /something(/|$)(.*)
                pathType: ImplementationSpecific
                backend:
                  service:
                    name: http-svc
                    port:
                      number: 80

To rewrite a request for rewrite.bar.com/something/new to rewrite.bar.com/new, you must add specific annotations to the Ingress resource. Currently, the Kubernetes Ingress-NGINX project provides over 130 annotations to cater to diverse scenarios.

However, the annotations used by different Ingress controllers are incompatible with one another.

This lack of standardization makes it hard for users to migrate smoothly from one Ingress controller to another, and it reduces the flexibility of managing Ingress configurations in Kubernetes.

East-West traffic management

Next, let’s talk about east-west traffic in Kubernetes.

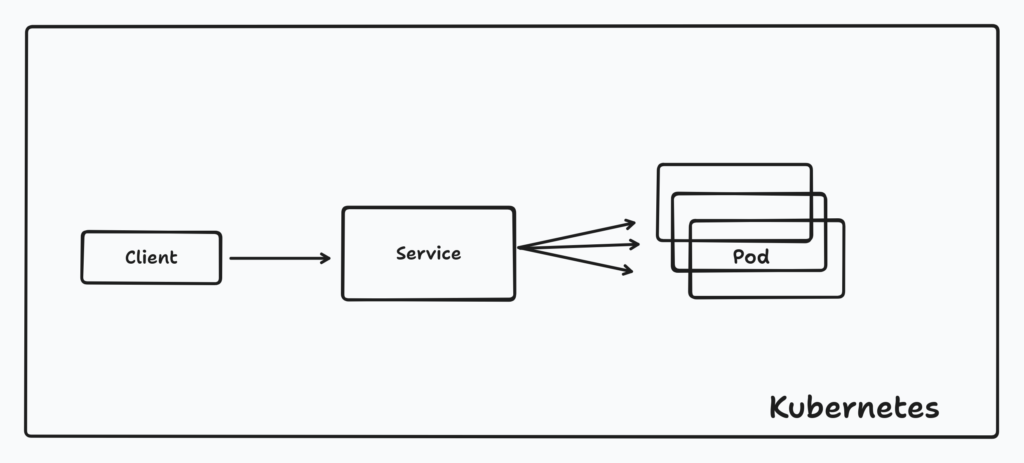

In the context of Kubernetes, east-west traffic primarily means communication between services within the cluster, and managing it means managing the calls between those services. Typically, Service is the conventional choice for these interactions.

An application in the cluster ultimately reaches the target Pod by sending its request through a Service.

But services in the cluster usually run on an overlay network, which carries some performance cost. Therefore, in some scenarios we do not want to reach other Pods through the Service; instead, we want to obtain the corresponding Pod IPs and connect to them directly.

In this scenario, the Service can be thought of as just a DNS name, or as a component that automatically manages resources such as Endpoints and EndpointSlices.

    ➜ ~ kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.43.0.1    <none>        443/TCP   9d

    ➜ ~ kubectl get endpoints
    NAME         ENDPOINTS             AGE
    kubernetes   131.83.127.119:6443   9d

    ➜ ~ kubectl get endpointslices
    NAME         ADDRESSTYPE   PORTS   ENDPOINTS        AGE
    kubernetes   IPv4          6443    131.83.127.119   9d
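One common way to use a Service purely as a DNS name that resolves to Pod IPs is a headless Service. This is a minimal sketch rather than something taken from the setup above; the Service name, selector, and ports are hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: backend-headless   # hypothetical name
spec:
  clusterIP: None          # headless: no virtual IP is allocated
  selector:
    app: backend           # hypothetical Pod label
  ports:
    - port: 80
      targetPort: 8080
```

With `clusterIP: None`, a DNS lookup for the Service returns the IPs of the ready Pods directly, while Kubernetes still maintains the matching Endpoints and EndpointSlices.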

Of course, when it comes to east-west traffic management in a Kubernetes cluster, service mesh projects such as Istio, Linkerd and Kuma come to mind. These projects handle east-west traffic management by creating their own CRDs.

For example, the following is a Kuma TrafficRoute configuration that splits east-west traffic between two versions of a service.

    apiVersion: kuma.io/v1alpha1
    kind: TrafficRoute
    mesh: default
    metadata:
      name: api-split
    spec:
      sources:
        - match:
            kuma.io/service: frontend_default_svc_80
      destinations:
        - match:
            kuma.io/service: backend_default_svc_80
      conf:
        http:
          - match:
              path:
                prefix: "/api"
            split:
              - weight: 90
                destination:
                  kuma.io/service: backend_default_svc_80
                  version: '1.0'
              - weight: 10
                destination:
                  kuma.io/service: backend_default_svc_80
                  version: '2.0'
        destination: # default rule is applied when endpoint does not match any rules in http section
          kuma.io/service: backend_default_svc_80
          version: '1.0'

The configurations of the various implementations are also incompatible with each other. Although the functional differences are small, this incompatibility makes it hard for users to migrate from one implementation to another.

To briefly summarize traffic management in Kubernetes:

- North-south traffic has a standard in Ingress, but because the resource is not expressive enough, each vendor's implementation is incompatible with the others and the migration cost is high;

- East-west traffic has no standard at all, so each vendor has to define its own CRDs to meet its requirements, and these are likewise incompatible with each other.

What is Gateway API?

Why does Gateway API appear?

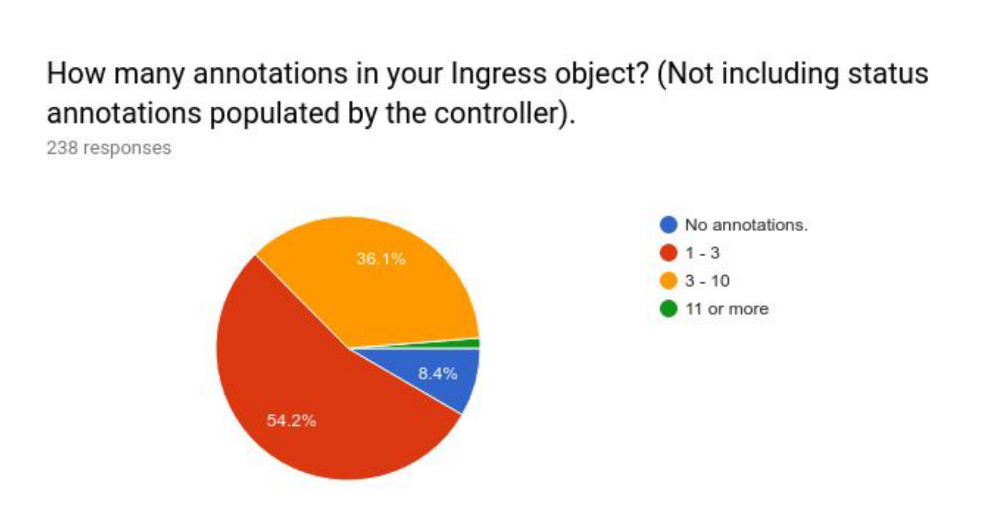

A survey conducted by the Kubernetes community in 2018 looked at how many annotations users add to their Ingress resources to meet specific needs. The results show that only 8% of users did not add any extra annotations.

Although no follow-up survey has been conducted since, we have continued to add annotation-based features in response to user needs and feedback.

So the proportion of users who add no extra annotations has probably dropped considerably by now.

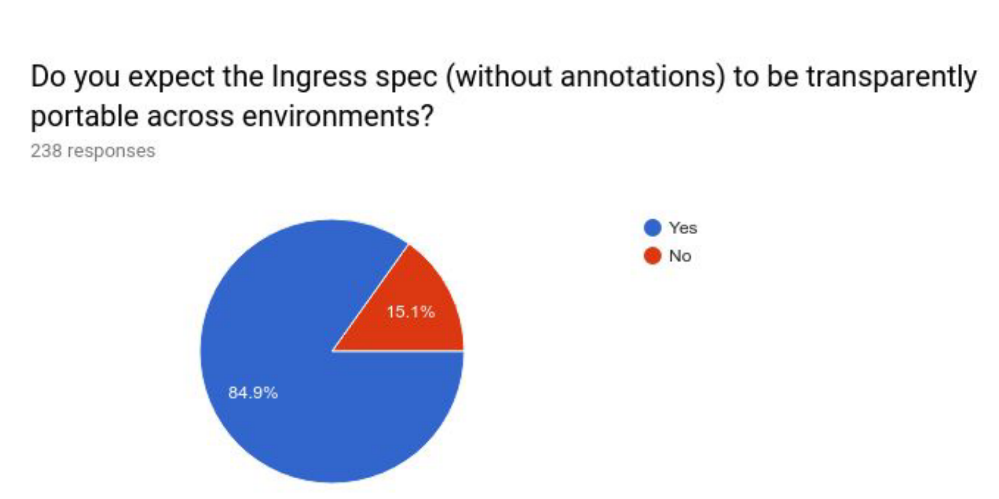

At the same time, it can be seen that nearly 85% of users expect Ingress to have good portability. In this way, users can more easily use the same configuration in different environments and clusters.

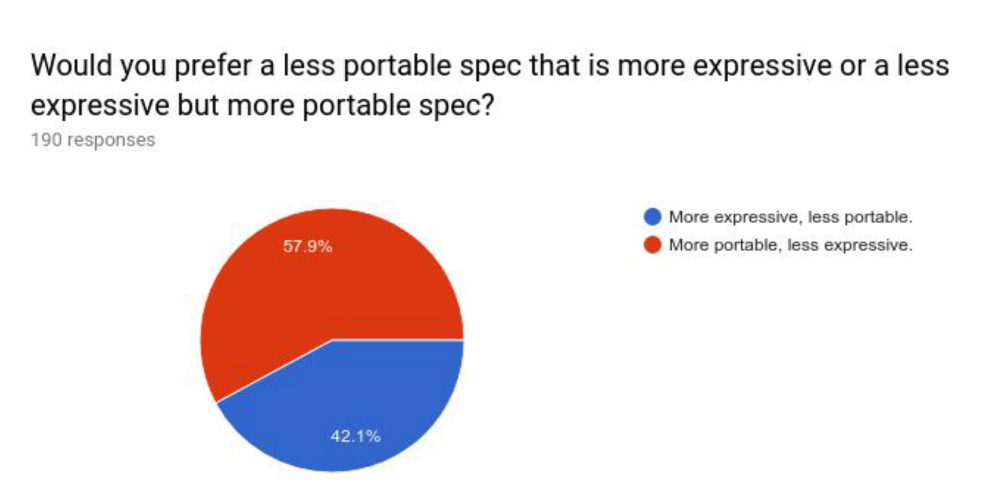

And compared to being more expressive, what users want more is portability.

Judging from the entire survey results, the situation of users regarding the Ingress API is: many people still use it, but there are still many improvements.

Therefore, at the 2019 San Diego KubeCon conference, many people from the Kubernetes Networking Special Interest Group (SIG Network) discussed the current status of Ingress, user needs and other related content, and reached an agreement to start the formulation of the Gateway API specification. For the specific story behind it, please refer to Gateway API: From Early Years to GA

Current status of Gateway API

Because when the Gateway API was designed, the Ingress resource had existed for 4 years and received feedback from many users in real environments.

Based on these experiences, the designed Gateway API has the following characteristics:

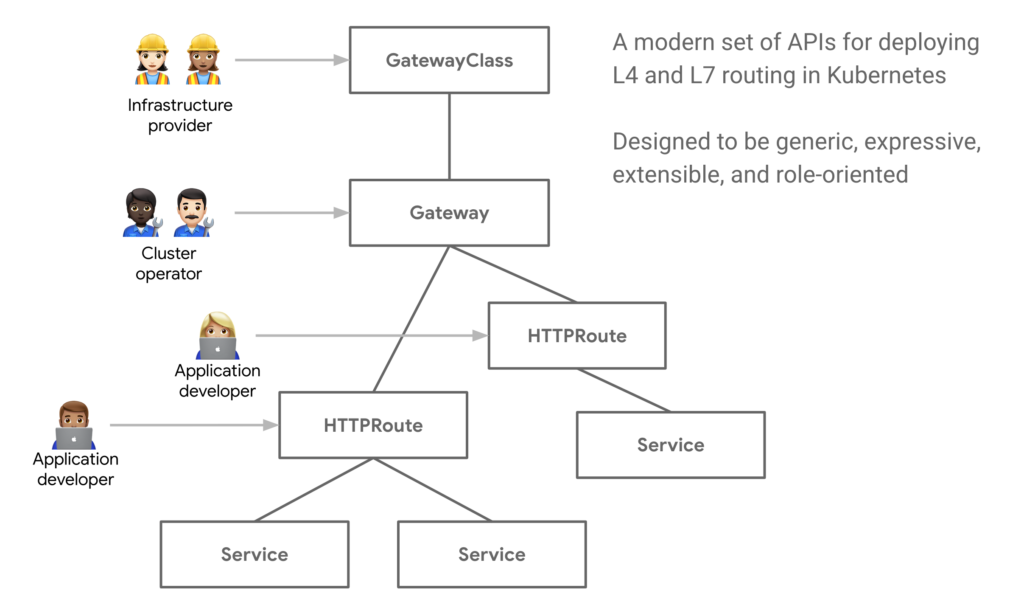

- role-oriented

The design of the Gateway API is role-oriented, specifically:

GatewayClass: This is provided/configured by the infrastructure provider and can be used to define some infrastructure-related capabilities. For example, the following configuration defines which controller is used to complete these capabilities and what IP address pools can be used.

    apiVersion: gateway.networking.k8s.io/v1
    kind: GatewayClass
    metadata:
      name: internet
    spec:
      controllerName: "example.net/gateway-controller"
      parametersRef:
        group: example.net   # API group only; the version is not part of this field
        kind: Config
        name: internet-gateway-config
    ---
    apiVersion: example.net/v1alpha1
    kind: Config
    metadata:
      name: internet-gateway-config
    spec:
      ip-address-pool: internet-vips

Gateway: This is managed by cluster operators and defines capabilities of the Gateway itself, such as which ports to listen on and which protocols to support. For example, the following configuration defines a Kong Gateway that listens only on port 80 and supports the HTTP protocol.

    apiVersion: gateway.networking.k8s.io/v1
    kind: Gateway
    metadata:
      name: kong-http
    spec:
      gatewayClassName: kong
      listeners:
        - name: proxy
          port: 80
          protocol: HTTP

HTTPRoute/TCPRoute/*Route: Routing rules can be managed and published by application developers, because they know which ports the application needs to expose, which paths it serves, and which Gateway features it relies on. For example, the following configuration specifies that when a client requests a path with the /echo prefix, the request is sent to port 1027 of the echo Service.

    apiVersion: gateway.networking.k8s.io/v1
    kind: HTTPRoute
    metadata:
      name: echo
    spec:
      parentRefs:
        - name: kong-http   # must match the name of the Gateway this route attaches to
      rules:
        - matches:
            - path:
                type: PathPrefix
                value: /echo
          backendRefs:
            - name: echo
              kind: Service
              port: 1027

Moreover, nothing in this configuration is specific to a provider, so it is highly portable: migrating between different environments or between different controllers is very convenient.
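To make the role split a little more concrete, here is a minimal sketch of how a cluster operator could limit which namespaces are allowed to attach routes to a Gateway, using the `allowedRoutes` field of a listener. The names `shared-gateway`, `infra` and `team-a`, as well as the `shared-gateway-access` label, are hypothetical.

```yaml
# Owned by the cluster operator.
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: shared-gateway
  namespace: infra
spec:
  gatewayClassName: kong
  listeners:
    - name: proxy
      port: 80
      protocol: HTTP
      allowedRoutes:
        namespaces:
          from: Selector             # only namespaces matching the selector may attach
          selector:
            matchLabels:
              shared-gateway-access: "true"
---
# Owned by an application team; the team-a namespace is assumed to carry the label above.
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: echo
  namespace: team-a
spec:
  parentRefs:
    - name: shared-gateway
      namespace: infra               # cross-namespace reference to the operator's Gateway
  rules:
    - backendRefs:
        - name: echo
          port: 1027
```

The operator decides who may use the Gateway, and the application team only writes routing rules, without touching listener or infrastructure settings.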

- More expressive

The Gateway API is more expressive than Ingress: the desired behavior can be described with fields natively defined in the spec, without resorting to annotations as with the Ingress resource.

A rewrite requirement like the one mentioned earlier for Ingress can be expressed in the HTTPRoute resource of the Gateway API in the following way.

    apiVersion: gateway.networking.k8s.io/v1beta1
    kind: HTTPRoute
    metadata:
      name: http-filter-rewrite
    spec:
      hostnames:
        - rewrite.example
      rules:
        - filters:
            - type: URLRewrite
              urlRewrite:
                hostname: elsewhere.example
                path:
                  type: ReplacePrefixMatch
                  replacePrefixMatch: /fennel
          backendRefs:
            - name: example-svc
              weight: 1
              port: 80
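For a closer comparison with the Ingress-NGINX example shown earlier, the sketch below approximates the same "/something/new to /new" rewrite and adds header and method matching, which Ingress could not express natively. The Gateway name `kong-http` is reused from the earlier example, the `x-env` header is hypothetical, and `URLRewrite` and method matching are extended features, so support depends on the implementation.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: rewrite
  namespace: default
spec:
  parentRefs:
    - name: kong-http
  hostnames:
    - rewrite.bar.com
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /something
          method: GET                # match on the HTTP method
          headers:
            - name: x-env            # hypothetical header, exact match by default
              value: canary
      filters:
        - type: URLRewrite
          urlRewrite:
            path:
              type: ReplacePrefixMatch
              replacePrefixMatch: /  # /something/new is forwarded as /new
      backendRefs:
        - name: http-svc
          port: 80
```

No annotations are involved, so the same manifest should behave the same way on any implementation that supports these fields.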

- Extensible

When the Gateway API was designed, several extension points were reserved. For example, in the following configuration, spec.parametersRef can reference any custom resource to meet more complex requirements.

    apiVersion: gateway.networking.k8s.io/v1
    kind: GatewayClass
    metadata:
      name: internet
    spec:
      controllerName: "example.net/gateway-controller"
      parametersRef:
        group: example.net   # API group only; the version is not part of this field
        kind: Config
        name: internet-gateway-config
    ---
    apiVersion: example.net/v1alpha1
    kind: Config
    metadata:
      name: internet-gateway-config
    spec:
      ip-address-pool: internet-vips

In general, the Gateway API is a role-oriented, more expressive, and extensible set of specifications. Thanks to these characteristics, Gateway API configuration is more portable, and migrating between providers is easier.

Is Gateway API the future of traffic management in Kubernetes?

Gateway API has recently reached GA, but only three resources are GA so far: GatewayClass, Gateway and HTTPRoute. Many other resources have been defined but are still at the alpha or beta stage, so for now it mainly covers conventional L7 needs.

GAMMA (Gateway API for Service Mesh) is also continuing to evolve and is used to define how to use the Gateway API to manage east-west traffic in Kubernetes.
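As a rough sketch of the GAMMA approach, an HTTPRoute can name a Service instead of a Gateway as its parent, so the rules apply to traffic sent to that Service from inside the cluster. The example below mirrors the 90/10 split from the Kuma TrafficRoute shown earlier; the Service names `backend`, `backend-v1` and `backend-v2` are hypothetical, and mesh support was still experimental around the v1.0 release, so behavior depends on the mesh implementation.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: api-split
  namespace: default
spec:
  parentRefs:
    - group: ""            # core API group, because the parent is a Service
      kind: Service
      name: backend        # hypothetical Service that clients call
      port: 80
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /api
      backendRefs:
        - name: backend-v1 # hypothetical Service for version 1.0
          port: 80
          weight: 90
        - name: backend-v2 # hypothetical Service for version 2.0
          port: 80
          weight: 10
```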

According to the current development of Kubernetes and the evolution of the community, the Gateway API is the specification for the next generation of Kubernetes traffic management.


Conclusion

I personally think that no other specification will replace the Gateway API.

In addition, there is an idea in the community to use the Gateway API to simplify the Service API mentioned many times in this article, and thereby reduce the cost of maintaining it.

Let us wait and see!